Learning from “Intelligent Failures”

Failure is neither always bad nor always good – what matters is the context.

Any response to failure must be tuned to the context within which it occurs.

This matters especially if we want to encourage positive behaviours in our people and teams in an increasingly complex operational environment.

Is it possible to reconceptualise the value of failure to our organisation and operational effectiveness?

The focus of this piece is complex failure and its valuable counterbalance, known as ‘intelligent failure’.

What I’m not talking about are the preventable failures – those caused by deviations from accepted procedures.

Air Force Relationship with Failure

Failure is generally not a welcome option within the Air Force. Failure is indeed bad in certain contexts. However, given the complex and uncertain nature of military operations, jumping to simple explanations can neglect the nuance of the situation, opportunities for learning and ways of gaining advantage.

Our understanding of failure is coloured by our experience in training, aviation safety and risk management settings. For instance, trainees pass or fail against performance metrics for fitness, weapon safety and courses. In an aviation safety setting, failure is dealt with in a dry, technical way – part failure, procedural failure, system failure.

And within risk management, failure is related to the non-achievement of an outcome.

Failure is something to be detected, reported, analysed and managed. And the risk of failure is to be controlled (to avoid it) or mitigated (to respond to it). All of this adds up to a strong, negative conception of failure for the aviator. It encourages failure avoidance at all costs – which leaves opportunity on the table and could lead to failures being hidden, or to punishment for the individuals responsible.

In spite of nice sentiments about learning from failure’s lessons and the sprinkling of thought leaders within the organisation who embody this, the brutal fact is that learning from failure simply is not valued by the organisation.

For instance, failure is dealt with in a superficial way in both the leadership and learning doctrines, providing little nuance for the military professional. Other hints come from examining how we view the success of our people. Success is fundamental to our promotion, performance reporting and awards systems. We format individual reporting narratives with templates like ‘Did-Achieved-Demonstrated’ and previously ‘Situation-Task-Action-Result’.

What this emphasises is that behaviour is valued on the basis of achievement, results and demonstrable outcomes. This leaves little room to set conditions for a possible future whose results may not be apparent for some time. The preference then is for the present, the status quo. The shallow narratives that we craft, however, leave the reader with no appreciation of how these results came about.

They are also prone to cognitive biases such as the ‘narrative fallacy’, which is the tendency to link unrelated events into a narrative and impose a pattern of causality. This cognitive trap typically overestimates the role of skill and underestimates the role of luck as factors of success.

The Challenge

How is this resolved against the overwhelming body of evidence that has said for decades that ‘failure’ is neither a synonym for ‘incompetence’ nor always bad?

There is a conflict between what is known within contemporary literature as being productive and our reflexive response to failure.

And how do we progress from here, so that we can actively promote and nurture valuable ‘intelligent failure’ that acts as a counterbalance to complex failure?

The Complexity-Failure Relationship

Our operations and capabilities grow ever more complex – and this is especially apparent within competition below the threshold of conflict. Competition is about gaining and maintaining relative advantage. It is a non-linear (temporally and in effect), continuous process that is not addressed via technological superiority.

The expectation from our political and military leadership is that we must advance national interests in contexts that challenge our mental ‘map’ of the world. This is because ‘[our] map is not the actual territory’.

What that means for us is that we are spending more time at the ‘frontier’ of the profession of arms – where we leave the solid ground of ‘best practice’ and even ‘good practice’. Productive reactions to complexity flow in the order of ‘probe-sense-respond’. It is about pushing forward through the darkness of our understanding and illuminating as far forward as we can.

This can be thought of as an experimental process during which we will of course face failure – but we can fail intelligently, too.

‘Safe to fail’ Experiments

The good news is that we don’t have to be scientists to carry out experiments at the frontier to generate novel practices. There is room for experimentation at all levels of the Air Force and in every workplace. Intelligent failures which result from experiments at the frontier provide valuable new knowledge that can help us leap ahead of a competitor or adversary.

These experiments are small and pragmatic. They outline a direction rather than a destination, and they focus on learning and sharing ideas. It’s a good idea to design the experiment so that the stakes are lowered as far as possible – and in some instances consequences may even be reversible.

Experiments can be playful or run in parallel. Taking small, pragmatic steps toward a new direction can help constrain uncertainty and illuminate new ground of the frontier. The preferred direction could be called ‘mission command’ in the military sense; and in the business world, a shared vision of the future guides these experiments.

Importantly, safe to fail experiments must be underpinned by Psychological Safety – the absence of interpersonal fear. That is, team members have a safe space to give constructive, critical feedback to surface errors and identify novel opportunities for improvement.

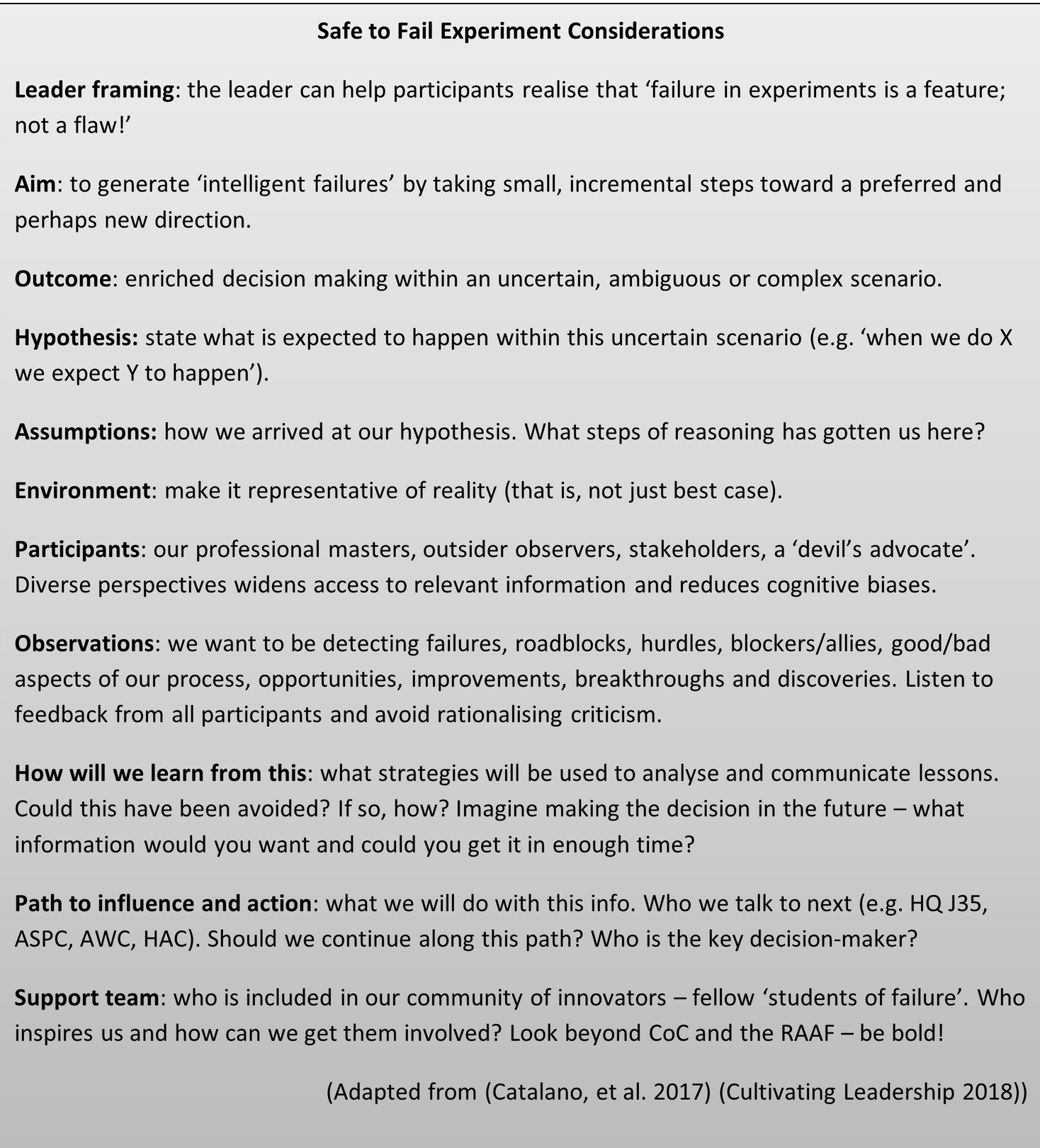

Safe to Fail Experiment Considerations

I offer the below approach to carrying out experiments at the frontier, adapted from the body of knowledge referenced so far. These experiments are investments into understanding a possible future state. Importantly, having a window into a potential future informs planning, management and decision making.

I know from experience as a structural integrity engineer, however, that experiments will never be fully representative of real life and all the possible conditions faced in the future. Safe to fail experiments are also crucial investments in our people’s capacity to deal with ambiguity, uncertainty and complexity. It is imperative, then, that we continue to empower our professional masters to boldly explore uncharted territories.

The Value of Failure to the Air Force

We have an opportunity to reframe failure in a way that better prepares us for complexity and enables us to seize advantage that comes from ambiguity and novel situations.

This is possible through the design and conduct of “safe to fail” experiments that produce intelligent failures. These valuable failures enhance decision making and help us navigate at the frontier of our professional mastery. As well as enriching our decision making, these experiments are productive ways to grow the capacity of our aviators to grapple with ambiguity and complexity.

As members of the profession of arms, it is our responsibility to continue to courageously illuminate new frontiers so that we can further national objectives.

Biography

In an attempt to avoid the Dunning-Kruger Effect, Chris is sticking to what he knows – failure. As a structural integrity engineer, he has had a career focused on detecting, analysing and learning from failures. Understanding what can go wrong and influencing decision making at all levels of the Air Force has been crucial in keeping our personnel safe and capabilities effective.

This article was published by Central Blue on May 28, 2022.