Recently, a team of researchers made headlines for a stunning announcement: a theoretical breakthrough that expanded our understanding of the first law of thermodynamics. But to understand that result, we first have to appreciate why the laws of thermodynamics are so fantastically important, critical—and limited.

The laws as we know them first began to coalesce in the early 19th century, as physicists tried to puzzle out the inner workings of steam engines. The laws that those early scientists wrote down were empirical, which means they were not based on any grand theory of the universe, but on cold, hard experimental verification. In other words, they wrote down these statements because, after years of repeated experiments, they always saw these statements holding true.

✅ Know Your Terms: Thermodynamics is the study of the effects of work, heat, and energy on a system; it’s only concerned with large-scale responses of a system, which we can observe and examine through experiments, according to NASA.

Today, modern physics has a much more fundamental and sophisticated view of thermodynamics, a view based on the statistical properties of countless microscopic particles. Therefore, we now have explanations for most of these laws, which still hold today.

The First Law of Thermodynamics: Nothing’s for Free

Energy can neither be created nor destroyed in isolated systems. This is the conservation of energy, and it stands as a bedrock beneath all of physics. It’s a foundational principle that allows us to explain almost every aspect of material existence. And thermodynamics is no exception. The first law of thermodynamics is just an expression of energy conservation written for . . . well, thermodynamic systems.

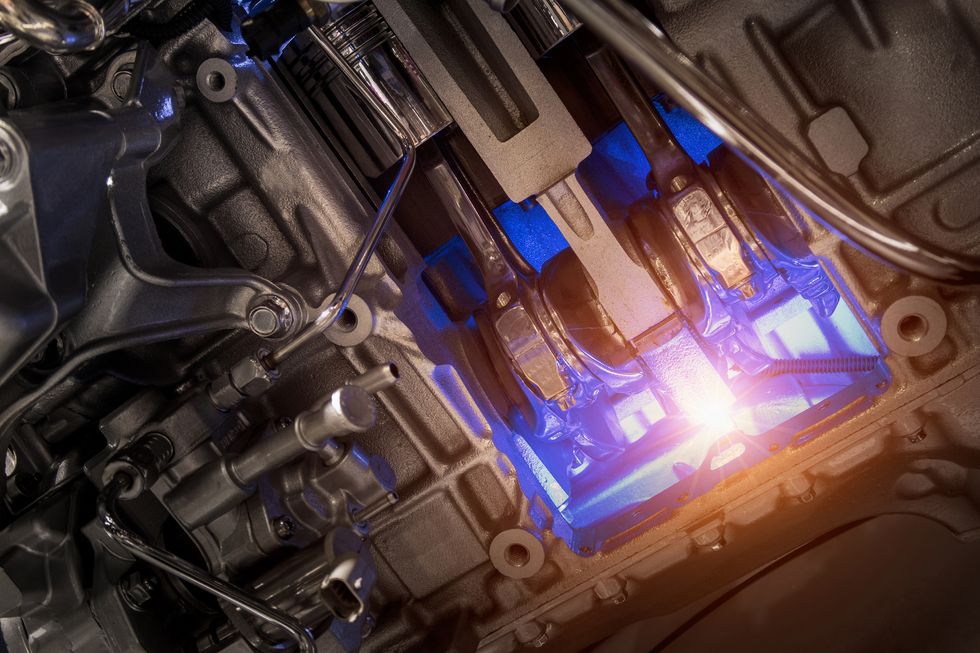

If we examine a system (e.g., the air inside your room, the cylinder in your car engine, a cloud of gas surrounding a newborn star, a crystal lattice in a laboratory experiment), then we can add up all the energy in that system. There might be kinetic energy if the whole system is moving, and there might be potential energy too (the energy stored in a system as a result of its position). Thermodynamics adds a new quantity, called internal energy, into the fold; it’s the energy stored inside the system itself, in the microscopic motion and interactions of its particles.

If we add heat to the system, its internal energy increases. If the system does work on its surroundings (like pushing on a piston in your car engine), then its internal energy decreases.

The first law states that heat and work are the only ways to change the internal energy of a closed system (one that can trade energy, but not matter, with its surroundings), and that a system’s internal energy is the only kind “available” for useful work. But the first law isn’t just a bland statement; it’s also a workhorse. It allows us to calculate engine efficiency, keep track of work, and generally keep good accounting of all the ins and outs of a system we’re trying to understand.
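The accounting described above can be sketched in a few lines of Python. This is only an illustration: the function names are hypothetical, the numbers are made up, and the sign convention (heat added to the system is positive, work done by the system is positive) is an assumption, since the article does not fix one.

```python
def internal_energy_change(heat_added, work_done_by_system):
    """First-law bookkeeping: delta_U = Q - W.

    Assumed sign convention: heat flowing INTO the system is positive,
    work done BY the system on its surroundings is positive.
    """
    return heat_added - work_done_by_system


def engine_efficiency(heat_in, heat_out):
    """Fraction of the absorbed heat that a cyclic engine turns into work.

    Over one full cycle the internal energy returns to its starting value,
    so the first law gives W = Q_in - Q_out.
    """
    if heat_in <= 0:
        raise ValueError("heat_in must be positive")
    work = heat_in - heat_out
    return work / heat_in


# Made-up numbers: a gas absorbs 500 J of heat and does 200 J of work on a piston.
print(internal_energy_change(500.0, 200.0))  # 300.0

# Made-up numbers: an engine takes in 1000 J per cycle and dumps 600 J as waste heat.
print(engine_efficiency(1000.0, 600.0))  # 0.4
```

Nothing here predicts how much heat an engine must waste; the first law only guarantees that the books balance.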

The Second Law: Chaos Reigns Supreme

If I put a glass of ice on the counter, the room doesn’t freeze. Instead, the ice melts. Left to itself, heat always flows from warmer objects to colder objects, and this simple observation forms the basis of the second law of thermodynamics.

The second law is the place where we encounter a new property of systems: entropy. The entropy of a system is a measure of the number of different ways that the internal microscopic components of a system can rearrange themselves and leave everything else the same. For example, the air in the room you’re sitting in has a lot of energy, so much so that the air molecules are constantly buzzing around, bumping into each other, and rearranging themselves. But despite all these never-ending microscopic changes, the room full of air is still the room full of air, with the same temperature, pressure, and volume. The air has a relatively high entropy, meaning that there are a lot of ways for the air molecules to rearrange themselves without you noticing.

On the other end of the spectrum, a group of extremely cold atoms in a solid don’t have a lot of energy, and so they can’t really move around all that much. Without a lot of options available, their entropy is low.
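Counting rearrangements can be made concrete with a toy model. The sketch below assumes a box divided into two halves and counts how many ways a given number of molecules can sit on the left; it then converts that count into an entropy using Boltzmann's formula, S = k_B ln Ω, which the article describes in words but does not write down. The function names are hypothetical.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by SI definition)


def multiplicity(n_total, n_left):
    """Number of ways to pick which n_left of n_total molecules sit in the left half."""
    return math.comb(n_total, n_left)


def boltzmann_entropy(num_microstates):
    """Boltzmann entropy S = k_B * ln(Omega), in J/K."""
    return K_B * math.log(num_microstates)


n_molecules = 100

# Every molecule crammed into the left half: exactly one arrangement, zero entropy.
crowded = multiplicity(n_molecules, n_molecules)

# An even split: an enormous number of indistinguishable-looking arrangements.
even = multiplicity(n_molecules, n_molecules // 2)

print(crowded, boltzmann_entropy(crowded))
print(even, boltzmann_entropy(even))
```

Even with only 100 molecules, the evenly spread state has vastly more arrangements than the crowded one, which is why the air in a room stays spread out.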

According to the second law, the entropy of an isolated system never decreases, and in any real process it increases. If I bring a cold object into contact with a warm object, the two will find a common temperature, and the overall entropy of the combined system will be greater than the sum of the entropies the two objects had while separate. Since entropy is also a measure of disorder, another way of stating the second law is to say that disorder in isolated systems only grows with time.
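The warm-meets-cold example can be checked numerically. The sketch below makes simplifying assumptions that are not in the article: both objects have the same constant heat capacity, and no heat leaks to anything else. Under those assumptions, each object's entropy change is C ln(T_final / T_initial). The function name and numbers are hypothetical.

```python
import math


def equilibrate(temp_hot, temp_cold, heat_capacity):
    """Bring two identical bodies into thermal contact and let them settle.

    Assumes equal, constant heat capacities (J/K) and no heat lost elsewhere.
    Temperatures are in kelvin. Returns the final temperature and the
    entropy change of each body in J/K.
    """
    final_temp = (temp_hot + temp_cold) / 2.0
    ds_hot = heat_capacity * math.log(final_temp / temp_hot)    # negative: it cools
    ds_cold = heat_capacity * math.log(final_temp / temp_cold)  # positive: it warms
    return final_temp, ds_hot, ds_cold


final_temp, ds_hot, ds_cold = equilibrate(350.0, 290.0, 100.0)
print(f"final temperature: {final_temp} K")
print(f"warm object:  {ds_hot:+.3f} J/K")
print(f"cold object:  {ds_cold:+.3f} J/K")
print(f"total change: {ds_hot + ds_cold:+.3f} J/K")
```

The warm object loses entropy, but the cold object gains more than the warm one loses, so the total goes up.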

The Third Law: Failure Is Not an Option

The last of the laws of thermodynamics tells us about an important relationship between entropy and temperature. Specifically, the law states that there is a special temperature, known as absolute zero, where the entropy of a perfectly ordered system (such as an ideal crystal) is also equal to zero. Plus, the law states that it’s impossible to bring a system all the way to absolute zero in any finite number of steps.

To understand the first part, imagine you have a solid block of ice. Even at very low temperatures, the water molecules can jiggle around a little. Since they have some options of where to be while maintaining their overall ice-identity, the entropy isn’t zero. But if you cooled the ice all the way down to absolute zero, the molecules would become locked in place with no wiggle room at all. There would be no additional ways to rearrange them—because they’re locked in place—and so the entropy also goes to zero.

This part of the law is essential because entropy isn’t something we can directly measure; it’s only a quantity that we can calculate relative to some baseline. Absolute zero gives us that baseline.

💡 Did You Know? In the far future, the universe will “approach” absolute zero, but never actually reach it. Entropy will keep growing as matter gets more and more disordered and loses the ability to do work.

But if we want to bring a system down to absolute zero, we actually have to, you know, touch it. Interact with it. Do something to it. The third law tells us that the closer a system gets to absolute zero, the harder we have to work to get it even closer. Every time we interact with a system, we destabilize it just a little bit, transferring just a tiny bit of energy. And the lower the energy of the system, the more relatively clumsy our attempts become. There’s just no way to reach absolute zero.
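One way to put numbers on "the harder we have to work" is the ideal (Carnot) refrigerator limit, which is not mentioned in the article and is used here only as an illustration. It says that pulling an amount of heat Q out of something at temperature T_cold, and dumping it into surroundings at T_hot, costs at least Q (T_hot - T_cold) / T_cold of work. The function name and the 300 K surroundings are assumptions.

```python
def min_work_to_extract(heat, temp_cold, temp_hot):
    """Least work an ideal Carnot refrigerator needs to remove `heat` (J)
    from a body at temp_cold (K) and reject it to surroundings at temp_hot (K).
    """
    if not 0 < temp_cold <= temp_hot:
        raise ValueError("need 0 < temp_cold <= temp_hot")
    return heat * (temp_hot - temp_cold) / temp_cold


# Cost of removing one joule of heat as the cold body gets colder,
# with the surroundings assumed to sit at 300 K.
for temp_cold in (100.0, 1.0, 0.01, 0.0001):
    work = min_work_to_extract(1.0, temp_cold, 300.0)
    print(f"{temp_cold:>8} K -> at least {work:.3g} J of work per joule removed")
```

The cost per joule grows without bound as the cold temperature shrinks toward zero, which matches the article's point that each step closer is harder than the last.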

The Zeroth Law: Starting Somewhere

Surprise! Decades after the formulation of the three laws of thermodynamics, physicists realized that they needed to add a fourth. But this one was so important, so basic, and so fundamental that they couldn’t just tack it on to the end. So here we have the zeroth law: if we have two systems, and each of them is in equilibrium with a third system, then those two systems are also in equilibrium with each other.

At first blush, the zeroth law looks like a rather bland bit of nerdy bookkeeping, but it’s actually a clever bit of insight. It establishes equilibrium as a well-defined relationship: some property becomes the same when two systems are in contact and have settled down together. Since the laws of thermodynamics concern themselves with systems in equilibrium, it seems kind of important to define what that is. Plus, this strategy allows us to define that shared property as something measurable: temperature. In order to measure a temperature, we have to bring our measurement device into equilibrium with the system we’re studying; when two systems are in equilibrium, by definition they have the same temperature.

Studies of out-of-equilibrium systems take up the bulk of thermodynamics research today. For example, by putting the zeroth law to the side, physicists can study exotic structures like time crystals (which are crystalline patterns that repeat in time), as well as the formation of snowflakes, protein folding, and the work of membranes in cells.

Paul M. Sutter is a science educator and a theoretical cosmologist at the Institute for Advanced Computational Science at Stony Brook University and the author of How to Die in Space: A Journey Through Dangerous Astrophysical Phenomena and Your Place in the Universe: Understanding Our Big, Messy Existence. Sutter is also the host of various science programs, and he’s on social media. Check out his Ask a Spaceman podcast and his YouTube page.